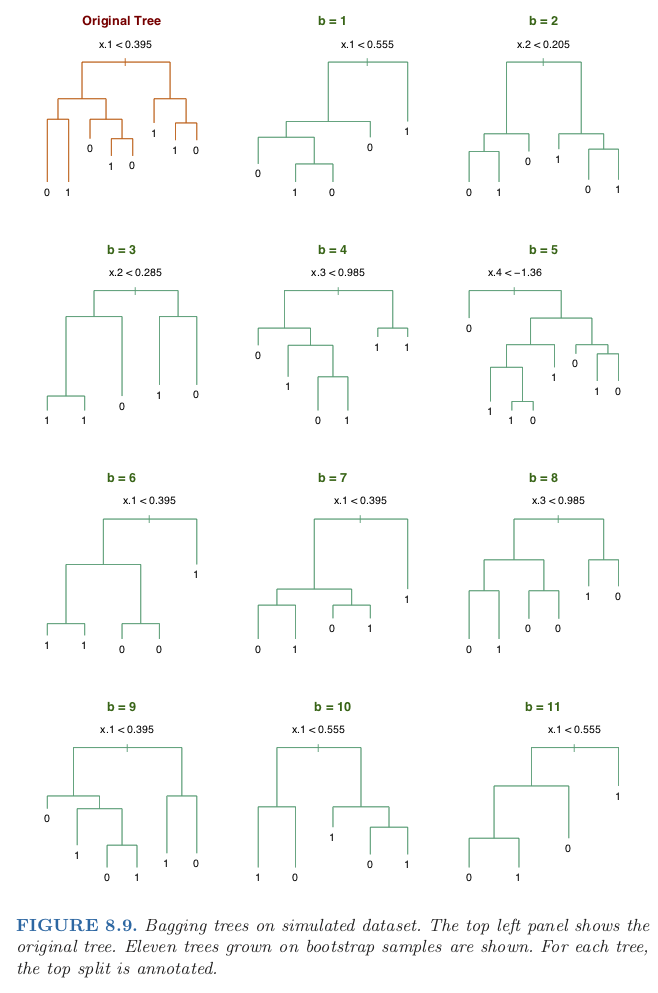

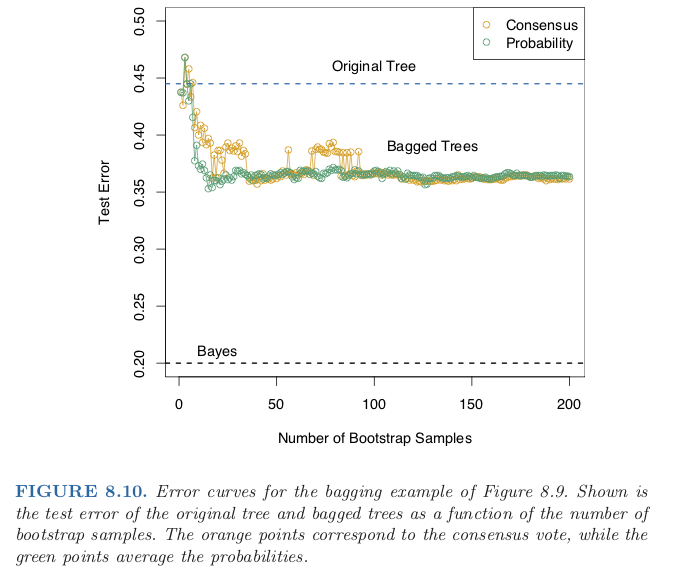

class: center, middle # Bagging CS534 - Machine Learning Yubin Park, PhD --- class: middle, center Recall the bias-variance decomposition for Squared Loss $$ \text{E}[(y-f)^2] = \text{Bias}[f]^2 + \text{Var}[f] + \sigma^2 $$ --- class: middle, center This time, we will decompose a bit differently: $$ \text{E}[(y-f)^2] = \text{E}[((y - \text{E}[f]) + (\text{E}[f] - f))^2]$$ $$ = \text{E}[(y - \text{E}[f])^2] + \text{E}[(\text{E}[f] - f)^2]$$ $$ \ge \text{E}[(y - \text{E}[f])^2] $$ --- class: middle, center Maybe too obvious. If we can make `\(f\)` close to `\(\text{E}[f]\)` the expected loss will be less. But, how? --- ## Bagging (1) Imagine that we know the "real" distribution for the samples: `\((\mathbf{x}_i, y_i)\)` To estimate `\(\text{E}[f]\)`, we would repeat: 1. draw a set of samples 1. estimate `\(f\)` 1. repeat the above as many as possible 1. then aggregate all estimated `\(f\)` The only caveat is that we do not know the "real" distribution. Perhaps, we can "simulate" the real distribution with the samples we have? BTW, What's the difference between 1) sampling from the distribution and 2) sampling from the samples? --- ## Bagging (2) Bagging (Bootstrap Aggregation) works as follows: 1. draw random samples from the data with "replacement" 1. estimate `\(f\)` 1. repeat `\(B\)` number of times then get the final model by averaging as follows: $$ f\_\text{bagged} = \frac{1}{B} \sum\_{b=1}^B f_b$$ --- class: middle, center .figure-500[] .reference[Chapter 8 of [ESLII](https://web.stanford.edu/~hastie/ElemStatLearn/)] --- class: middle, center .figure-500[] .reference[Chapter 8 of [ESLII](https://web.stanford.edu/~hastie/ElemStatLearn/)] --- ## Model Averaging and Stacking One step beyond the simple averaging models: $$ \text{E}[(y - \sum\_{b=1}^B w\_b f\_b)^2] \le \text{E}[(y - \frac{1}{B} \sum\_{b=1}^B f_b)^2] $$ If `\(w_b = \frac{1}{B}\)`, then the both sides are equal. How do we estimate the weights, `\(w_b\)`? We will divide the training set into two parts: - **Training I** for fitting `\(f\_b\)` - **Training II** for estimating `\(w_b\)` This can be viewed as stacking two layers of models, thus Model Stacking. --- class: center, middle ## Questions?