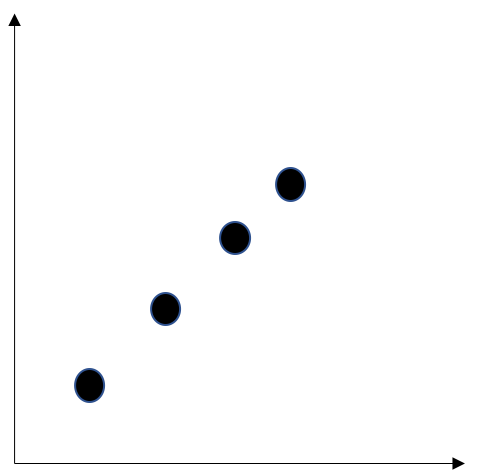

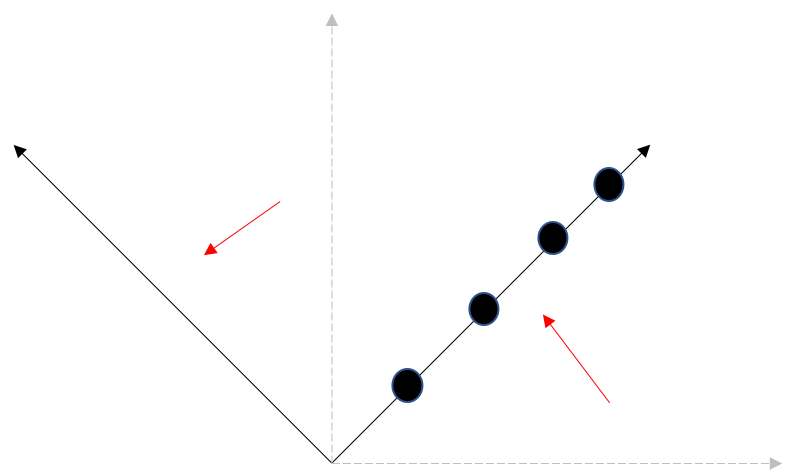

class: center, middle # Dimensionality Reduction CS534 - Machine Learning Yubin Park, PhD --- class: center, middle "Less is more" We want to reduce the dimension of data $$ \mathbf{X} \rightarrow \mathbf{X}^\prime $$ where `\(\text{dim}(\mathbf{X}^\prime) \ll \text{dim}(\mathbf{X})\)` --- class: center, middle with **one condition** $$ \mathcal{L}(\mathbf{y}, f(\mathbf{X})) \approx \mathcal{L}(\mathbf{y}, f(\mathbf{X}^\prime)) $$ i.e. without losing much information from the original data. --- ## Ways to Reduce Dimensions Many approaches exist: - Feature Selection - through manual analysis - through regularization techniques such as Lasso - Clustering - such as [k-means](https://en.wikipedia.org/wiki/K-means_clustering) - Linear Transformation - such as [Principal Component Analysis](https://en.wikipedia.org/wiki/Principal_component_analysis), [Singular Value Decomposition](https://en.wikipedia.org/wiki/Singular_value_decomposition) - Non-linear Transformation - such as [Neural-Net Word Embeddings](https://en.wikipedia.org/wiki/Word_embedding) We will cover the Linear Transformation approach in this lecture. --- class: middle, center .figure-350[] The data seem like distrubuted in 2D, but... --- class: middle, center .figure-350[] If we rotate the coordinates, they are distributed in 1D. --- ## Principal Component Analysis The rotation in the example is a part of "[linear transformation](https://en.wikipedia.org/wiki/Linear_map)". We want to find a linear transformation: $$ f(\mathbf{x}) = \mathbf{\mu} + \mathbf{V}\mathbf{x} $$ that minimizes the Squared Loss function: $$ \sum (\mathbf{x}_i - f(\mathbf{x}_i) )^T(\mathbf{x}_i - f(\mathbf{x}_i) ) $$ --- class: center, middle ## Questions?